Alessia Vignolo

Postdoc

Istituto Italiano di Tecnologia

——————————————————————

Microsoft Teams Meeting link here

Updated program here

Registration link here

——————————————————————

Humans can easily collaborate with others thanks to their capacity to understand other agents and anticipate their actions. This ability stems from the fact that humans are cognitive agents who combine sensory perception with internal models of the environment and of other people, and that they perceive other humans as agents with the same features: this enables mutual understanding and coordination.

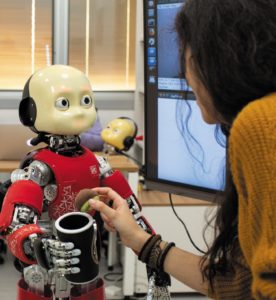

To interact with humans in an efficient and natural way, robots need a cognitive architecture as well. Without cognition, machines lack the ability to predict, understand and adapt to their partners, which prevents the establishment of long-lasting rapport. On the other hand, robots are an ideal stimulus for investigating which basic skills a cognitive social agent needs to make natural human-robot interaction possible.

Implementing cognitive architectures on robots could validate cognitive theories or raise new questions for cognitive scientists to investigate, while results on human behavior from human-robot interaction studies could give robotics engineers new insights for developing more socially intelligent robots.

The goal of the workshop is to provide a venue for researchers interested in the study of human-robot interaction (HRI), with a focus on cognition. It will be an ideal forum to discuss the open challenges in developing cognitive architectures for HRI, such as anticipation, adaptation (both emotional and motor), dyadic and group interaction, the human inspiration behind cognitive architectures, and their technological implementation by means of, among others, computer vision and machine learning techniques.

The workshop is targeted at HRI researchers who are interested in interdisciplinary approaches. In particular, to encourage discussion, we would like to welcome researchers from a broad range of disciplines, such as robotics, neuroscience, social sciences, psychology and computer science.

Important questions that will drive the final discussion

● In robotics, where is cognition most useful, and where is it less useful or not useful at all?

● How should different aspects of cognition (learning, autonomy, anticipation, adaptation…) be investigated to yield results useful for HRI?

● What is the minimal level of cognition a robot needs to be able to interact with a human?

● What can be achieved in terms of interaction by implementing a minimal level of cognition on a robot?

● How can cognitive robotics directly influence research in neuroscience and cognitive psychology aimed at interaction studies?